Powerful AI agents like Clawdbot promise to automate complex tasks, from financial management to cloud deployment. Cybersecurity experts, however, are warning of significant risks, including data theft, unauthorized access, and loss of control, and are calling for a critical reevaluation of current AI development practices.

Introduction

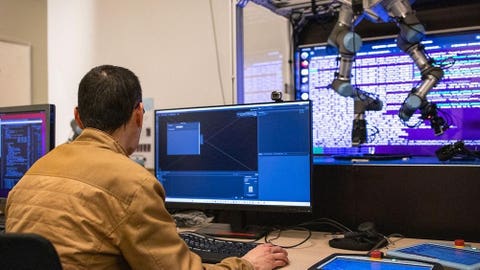

Truly autonomous AI agents, epitomized by concepts like "Clawdbot," promise to manage complex digital tasks with minimal human intervention. Yet as these systems move closer to reality, cybersecurity experts warn that the power of such agents is inseparable from security and privacy risks that could redefine digital safety and accountability.

The Core Details

AI agents, as discussed in the context of Clawdbot, represent a significant leap beyond traditional large language models. These systems are designed not just to understand and generate text, but to act autonomously across various digital platforms. Their projected capabilities include:

- Managing complex financial portfolios and making investment decisions.

- Automating intricate cloud infrastructure deployments and maintenance.

- Booking comprehensive travel itineraries, from flights and hotels to local activities.

- Writing, testing, and deploying code across different environments.

- Executing multi-step tasks across disparate web services and applications.

Security experts, however, highlight vulnerabilities intrinsic to these agents. The primary concerns are data theft enabled by extensive access privileges, unauthorized actions resulting from "hallucinations" or misinterpreted instructions, and prompt injection attacks, in which malicious text embedded in content the agent reads tricks it into harmful actions. The very autonomy that makes these agents powerful also makes them difficult to control and audit, raising questions about liability and mitigation when things go wrong.
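To make the concerns about access privileges and auditability concrete, here is a minimal Python sketch of one possible safeguard: a gate that every tool call must pass through. It is an illustration under stated assumptions, not a description of how Clawdbot or any real agent framework works. The `ToolGate` class, the risk tiers, and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical risk tiers; a real deployment would define its own policy.
LOW, HIGH = "low", "high"


@dataclass
class ToolGate:
    """Mediates every tool call an agent attempts (illustrative only)."""

    allowed: Dict[str, str]               # tool name -> risk tier
    approve: Callable[[str, dict], bool]  # human-in-the-loop callback
    audit_log: List[dict] = field(default_factory=list)

    def _record(self, tool: str, args: dict, outcome: str) -> None:
        # Log every attempt, allowed or not, so actions can be audited later.
        self.audit_log.append({"tool": tool, "args": args, "outcome": outcome})

    def call(self, tool: str, args: dict, tools: Dict[str, Callable]) -> Any:
        tier = self.allowed.get(tool)
        if tier is None:
            # Least privilege: anything not explicitly listed is refused.
            self._record(tool, args, "denied: not on allowlist")
            raise PermissionError(f"tool {tool!r} is not allowed")
        if tier == HIGH and not self.approve(tool, args):
            # High-risk actions need explicit human sign-off.
            self._record(tool, args, "denied: approval refused")
            raise PermissionError(f"tool {tool!r} was not approved")
        result = tools[tool](**args)
        self._record(tool, args, "allowed")
        return result
```

In this sketch a low-risk call such as a hypothetical `read_balance` runs immediately, a high-risk call such as a hypothetical `transfer` runs only if the `approve` callback returns `True`, and every attempt is recorded. A gate like this narrows what a hallucinating or hijacked agent can do, but it does not by itself prevent prompt injection.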

Context & Market Position

Autonomous AI agents like the theoretical Clawdbot are a frontier in artificial intelligence, pushing beyond the conversational and generative capabilities of current LLMs. Major tech companies and startups alike are investing heavily in agentic AI, driven by the vision of an assistant that can not only process information but also execute real-world tasks. This move toward self-executing AI places immense pressure on existing security models. Unlike a static application, an AI agent with access to APIs, financial accounts, and personal data presents a dynamic, constantly changing attack surface. The market is still working out how to balance the clear efficiency gains of such agents against the fundamental challenges of ensuring their safety, privacy, and ethical operation. Competition among AI developers currently centers on capability, but the emerging consensus is that security and control must advance even faster than capability to prevent catastrophic misuse or unintended consequences.

Why It Matters

Powerful AI agents like Clawdbot carry profound implications for consumers, businesses, and the wider digital ecosystem. For consumers, the promise of a hyper-efficient digital assistant is enticing, offering to streamline everything from daily errands to complex financial planning. Yet this convenience means granting an autonomous system access to highly sensitive personal and financial data, potentially exposing users to sophisticated fraud or privacy breaches that are hard to trace. For businesses, AI agents could unlock major gains in productivity and automation, but they also introduce novel cybersecurity vulnerabilities: a single compromised agent could lead to widespread data loss, operational disruption, or even regulatory penalties. The industry faces an urgent mandate to embed security-by-design principles from the outset, moving beyond reactive threat response to proactive measures that account for the unique characteristics of autonomous AI. Furthermore, the lack of clear accountability frameworks for agent actions creates a legal and ethical minefield that needs immediate attention.

What's Next

The next phase for AI agents will likely be a period of intense research and development focused on safety and control. Expect increased emphasis on sandboxing, ethical AI guidelines, and advanced prompt engineering to mitigate risks like prompt injection. Regulatory bodies worldwide are likely to accelerate discussions on AI accountability and data governance for autonomous systems. The success and adoption of agents like Clawdbot will hinge on the ability of developers and policymakers to build trust through demonstrable security and transparent control mechanisms, turning powerful potential into a safe, beneficial reality.
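As a rough illustration of the sandboxing idea mentioned above, the following Python sketch runs agent-generated code in a separate process with a time limit, an empty environment, and a throwaway working directory. The `run_untrusted` helper is a hypothetical name, and the sketch assumes a POSIX system. Process-level isolation like this is not a real security boundary; production systems would more plausibly rely on containers, virtual machines, or OS-level sandboxes.

```python
import subprocess
import sys
import tempfile


def run_untrusted(code: str, timeout_s: float = 5.0) -> subprocess.CompletedProcess:
    """Run agent-generated Python in a child process (illustrative only)."""
    with tempfile.TemporaryDirectory() as workdir:
        return subprocess.run(
            # -I puts the child interpreter in isolated mode: it ignores
            # PYTHON* environment variables and the user site directory.
            [sys.executable, "-I", "-c", code],
            cwd=workdir,          # scratch directory, deleted afterwards
            env={},               # do not pass the parent's secrets along
            capture_output=True,  # collect stdout/stderr for auditing
            text=True,
            timeout=timeout_s,    # raises subprocess.TimeoutExpired if exceeded
        )
```

A caller would inspect `returncode`, `stdout`, and `stderr` on the returned object before letting the agent act on the result. The child process can still reach the network and read any file the parent user can, which is why this approach only limits accidental damage and does not contain a determined attacker.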