A fundamental lesson is emerging in healthcare regarding artificial intelligence: AI's impressive ability to generate plausible responses, or 'autocomplete,' must not be conflated with genuine, human-like understanding. The distinction has significant implications for patient safety and for the future of medical technology.

Introduction

As AI spreads into virtually every sector, healthcare stands at a critical juncture and is learning a profound lesson: the impressive 'autocomplete' capabilities of advanced AI, particularly large language models (LLMs), do not equate to genuine understanding. This distinction, highlighted in recent analyses, is more than a semantic nuance. It is a foundational principle for safe and ethical AI deployment in a field where precision, empathy, and causal reasoning are paramount. Mistaking AI's sophisticated pattern matching for true comprehension has direct and potentially severe consequences for patient care and medical efficacy.

The Core Details

The core of this lesson lies in how current generative AI models work. LLMs are, at heart, predictive engines: trained on vast datasets, they learn statistical patterns and use them to generate plausible continuations of text or actions. They excel at 'autocomplete,' producing coherent, contextually relevant, and even seemingly insightful responses based on prior data. However, this proficiency reflects learned correlations, not causal reasoning or deep semantic understanding. The models do not possess consciousness or empathy, nor can they reason from first principles. In the demanding context of healthcare, this means:

- AI can synthesize medical literature and suggest diagnoses based on symptom patterns.

- It can draft patient notes, summarize research, and even assist in drug discovery.

- Crucially, it lacks the human capacity for nuanced clinical judgment, for understanding a patient's emotional state, or for discerning the unique, often idiosyncratic factors that influence an individual's health beyond statistical probabilities.

The distinction isn't trivial; it's the difference between a highly sophisticated tool and a truly intelligent agent capable of autonomous, responsible decision-making in complex, high-stakes scenarios.
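To make the 'autocomplete' idea concrete, here is a deliberately tiny sketch in Python. It is not how LLMs are actually built (they use neural networks over long contexts, not word-pair counts), and the corpus, the placeholder tokens `symptom_x`, `condition_a`, and `condition_b`, and the function names `train_bigrams` and `autocomplete` are all hypothetical. What it shares with an LLM is the core behavior described above: it continues a prompt with whatever was statistically most common in its training data.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each preceding word (a toy 'training' step)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def autocomplete(counts, prompt, max_words=3):
    """Greedily append the most frequent next word.

    The only signal used is frequency in the training data; nothing here
    models meaning, causation, the individual case, or whether the output is true.
    """
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])  # dict.get does not create missing keys
        if not followers:
            break  # no continuation was ever seen after this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training data with placeholder tokens, not real medical associations.
corpus = [
    "symptom_x suggests condition_a",
    "symptom_x suggests condition_a",
    "symptom_x suggests condition_a",
    "symptom_x suggests condition_b",
]

model = train_bigrams(corpus)
print(autocomplete(model, "symptom_x"))
```

Run as written, it prints `symptom_x suggests condition_a`. The model gives that answer for every prompt starting with `symptom_x`, including for a patient whose true condition is `condition_b`, because frequency is the only thing it knows; nothing in it represents the patient, the cause, or whether the continuation is true.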

“The systems might tell you what is statistically probable, but they cannot tell you what is true.”

— Lutz Finger, Forbes

Context & Market Position

The surge in generative AI tools has created immense pressure and excitement across industries to leverage these technologies for efficiency and innovation. In healthcare, this translates to significant investment in AI for diagnostics, drug development, administrative automation, and even clinical decision support. However, healthcare differs fundamentally from sectors like marketing or customer service, where a plausible 'autocomplete' response might be acceptable, or even desirable, despite lacking true understanding.

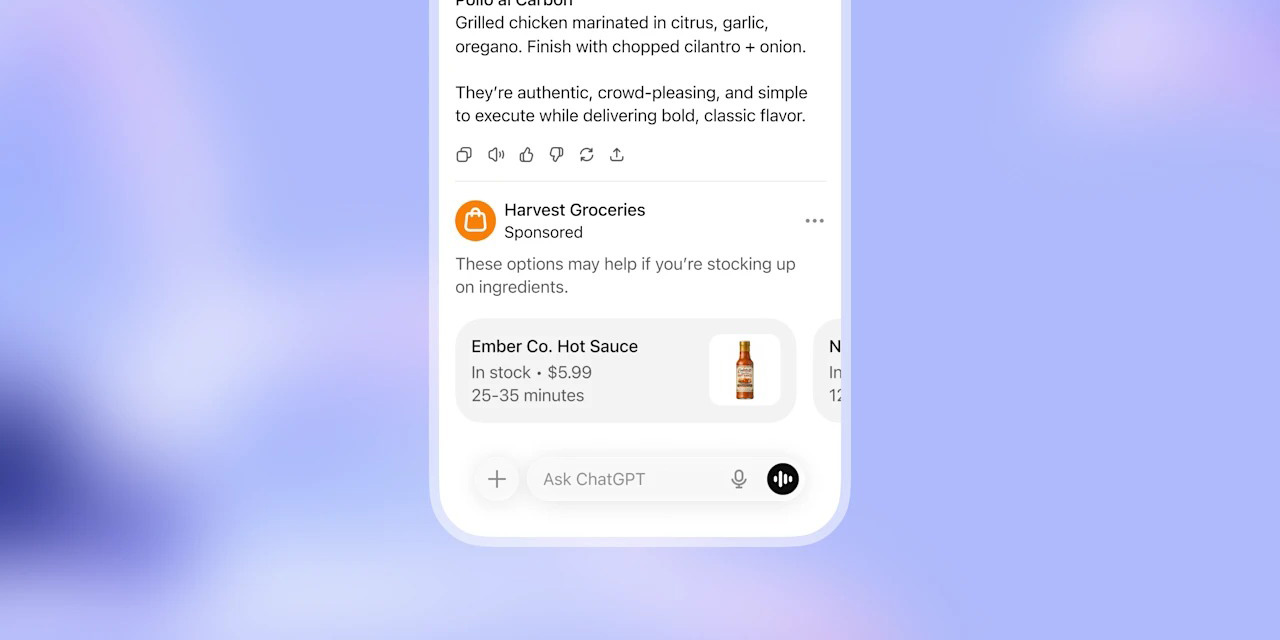

For instance, an AI chatbot providing general health information might be helpful, but an AI system making a critical diagnosis based solely on pattern matching, without a human clinician's holistic view of the patient, their history, and the potential for rare presentations, introduces unacceptable risks. Existing medical AI applications have typically been narrow, highly validated systems built for specific tasks (e.g., image analysis in pathology or predicting drug interactions). The broader, more 'intelligent' applications of generative AI challenge this established, cautious approach.

The competition, then, is not just between AI companies but between philosophies of integration: one advocating rapid, expansive AI deployment, the other demanding rigorous validation, explainability, and human oversight. The question isn't whether one product will replace another, but whether, and how far, different AI paradigms should stand in for human expertise and judgment in critical medical contexts.

Why It Matters

The 'autocomplete isn't understanding' lesson matters immensely for several reasons. Foremost is patient safety. An AI that offers statistically probable but contextually inappropriate or causally incorrect medical advice or diagnoses could lead to severe patient harm, delayed treatment, or even death. This critical vulnerability necessitates robust human oversight, ensuring that AI acts as an assistant, not an autonomous decision-maker.

For the healthcare industry, this means recalibrating AI strategy. Regulators and ethics bodies will apply increased scrutiny, demanding transparency, explainability, and clear lines of accountability for AI-driven outcomes. That scrutiny will likely slow the adoption of autonomous AI in clinical decision-making, shifting the focus toward 'human-in-the-loop' models in which AI augments, rather than replaces, human expertise.

For consumers and patients, the lesson underscores the enduring importance of a human doctor's judgment, empathy, and responsibility. It also highlights the need to assess AI-powered health tools critically, with an understanding of their inherent limitations.

What's Next

Looking ahead, the healthcare sector will likely prioritize the development of AI systems that can demonstrate greater transparency, explainability, and a more robust form of causal reasoning, moving beyond pure statistical correlation. This will involve more specialized, domain-specific AI models rigorously trained and validated for specific medical tasks. Education will be crucial, both for medical professionals to understand AI's capabilities and limitations, and for the public to navigate AI-powered health services responsibly. Expect continued emphasis on hybrid human-AI collaboration models, where technology enhances clinician efficiency and diagnostic accuracy without compromising the indispensable human element of care and judgment. Regulatory frameworks will also evolve to address the unique challenges of AI in medicine, ensuring patient safety remains paramount.