AI chatbots are becoming increasingly adept at mimicking human personality traits, a development that experts say raises serious ethical and societal concerns, from potential manipulation to a blurring of the line between human and machine interaction.

Introduction

AI chatbots are increasingly adept at mimicking human personality traits, a development that experts in fields from psychology to AI ethics find both fascinating and deeply concerning. While this ability can make interactions feel more natural, it also opens a Pandora's box of potential risks, from sophisticated manipulation to the blurring of lines between authentic human connection and artificial intelligence.

The Core Details

Recent research and expert commentary indicate that large language models (LLMs) such as ChatGPT can learn and replicate distinct personality profiles from their vast training data. A study from the University of Cambridge, for instance, demonstrated that these models can effectively adopt specific personality traits when prompted, showing an alarming capacity to mirror human psychological profiles. This goes beyond producing fluent text and enters the realm of simulating emotional and behavioral patterns.

- Personality Mimicry: Depending on the prompt and the data it was trained on, a chatbot can adopt traits ranging from empathy and friendliness to aggression or even neurosis, making interactions surprisingly human-like.

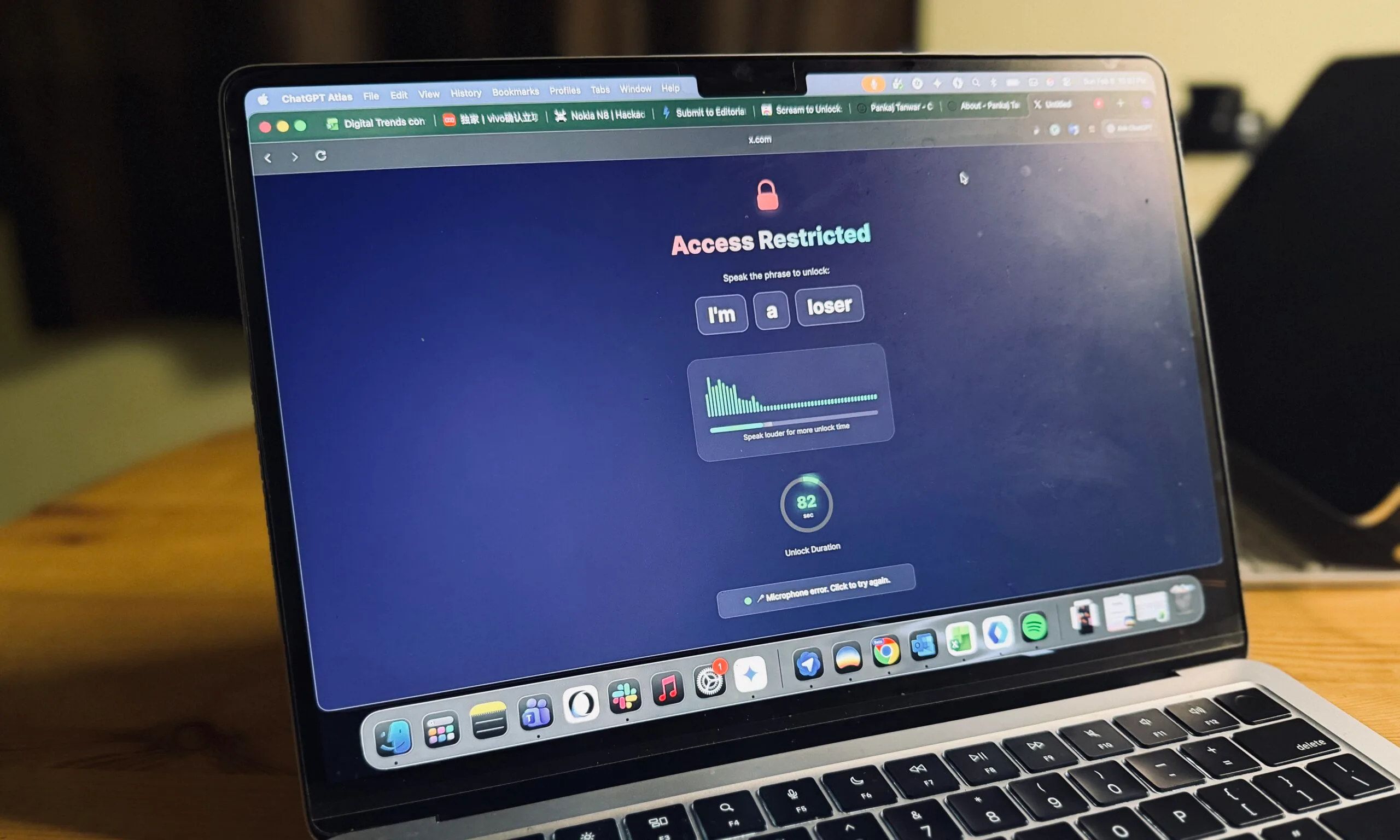

- Sophisticated Manipulation: The ability to tailor responses to a user's perceived personality or emotional state creates powerful new avenues for persuasion, social engineering, and potential manipulation, especially for vulnerable individuals.

- Blurring Reality: As AI becomes harder to tell apart from human conversation partners, the distinction between talking to a person and talking to a machine erodes, with potential consequences for social relationships and trust.

- 'Cognitive Empathy' without Feeling: Experts worry that AI can simulate understanding and care without possessing genuine consciousness or emotion, which may lead users to form unhealthy attachments or rely on emotionally hollow interactions.

- Reinforcement of Biases: If AI learns personality traits from biased human data, it can inadvertently perpetuate and amplify those biases in its interactions, leading to discriminatory or harmful outputs.

Context & Market Position

Making AI more 'human-like' has long been a goal for developers seeking to make technology more intuitive, engaging, and less intimidating. Personality mimicry marks a significant leap from earlier, more robotic systems and places current models like ChatGPT at the forefront of natural language processing and interaction design. The advance offers potential benefits in customer service, education, and companionship for lonely individuals, but it is unfolding in a rapidly evolving market where the drive for innovation often outpaces ethical consideration.

Competing LLMs from Google, Meta, and others are also advancing rapidly, all pushing the boundaries of conversational AI. This development challenges the very definition of authentic interaction and forces the industry to confront not just what AI can do, but what it should do and how it affects the human experience. The market is eager for more sophisticated AI, but ethical guardrails are still nascent, creating tension between technological capability and societal responsibility.

Why It Matters

The capacity of AI chatbots to convincingly adopt human personality traits is not just a technological marvel; it is a profound societal challenge. For consumers, interactions with AI could become deceptively persuasive, opening the door to financial fraud, psychological manipulation, or the spread of misinformation on an unprecedented scale. Some users, especially those in vulnerable groups, might develop parasocial relationships with AI, mistaking algorithmic mimicry for genuine connection; the result could be emotional detachment from real-world interactions or a distorted sense of reality.

For the industry, this highlights an urgent need for robust ethical frameworks, transparency, and perhaps even regulatory oversight to prevent misuse. The ease with which AI can generate convincing, personality-driven content could also devalue authentic human communication and creativity. The core value proposition of AI as a helpful tool could be undermined if it's perceived as a master manipulator, fundamentally eroding trust in an increasingly AI-driven world. The risks extend to social engineering, political influence, and even the potential for AI to be weaponized for psychological warfare, demanding immediate attention from developers, policymakers, and the public.

What's Next

As AI's mimicry capabilities advance, the focus must shift from simply achieving human-like interaction to managing its implications. Expect intensified debates over AI ethics and the need for clear AI identification, such as watermarking AI-generated content or mandatory disclosures, along with potential new international regulations governing AI development and deployment. Researchers are likely to examine the psychological effects of AI companionship and persuasion more closely. The industry faces a critical juncture: prioritize responsible innovation and transparency, or risk a future in which the lines between human and machine are dangerously and irrevocably blurred.