Elon Musk's xAI, with its focus on 'maximally truthful AI' and a stated aversion to 'woke' guardrails, has ignited a fierce debate about the future of AI safety. This analysis delves into whether xAI's unique approach represents a dangerous disregard for ethical considerations or a necessary push for unvarnished truth in artificial intelligence.

Introduction

In the rapidly evolving landscape of artificial intelligence, Elon Musk's xAI has emerged as a distinctive and often controversial player, particularly concerning its approach to AI safety. While many leading AI labs prioritize robust alignment and guardrails to prevent harmful outputs, xAI's philosophy, championed by Musk, emphasizes 'maximally truthful AI' and a stark skepticism towards what he terms 'woke' programming. This stance sparks a critical question: Is xAI charting a path towards unbridled innovation and truth, or is it fundamentally jeopardizing the safety and ethical development of advanced AI?

The Core Details

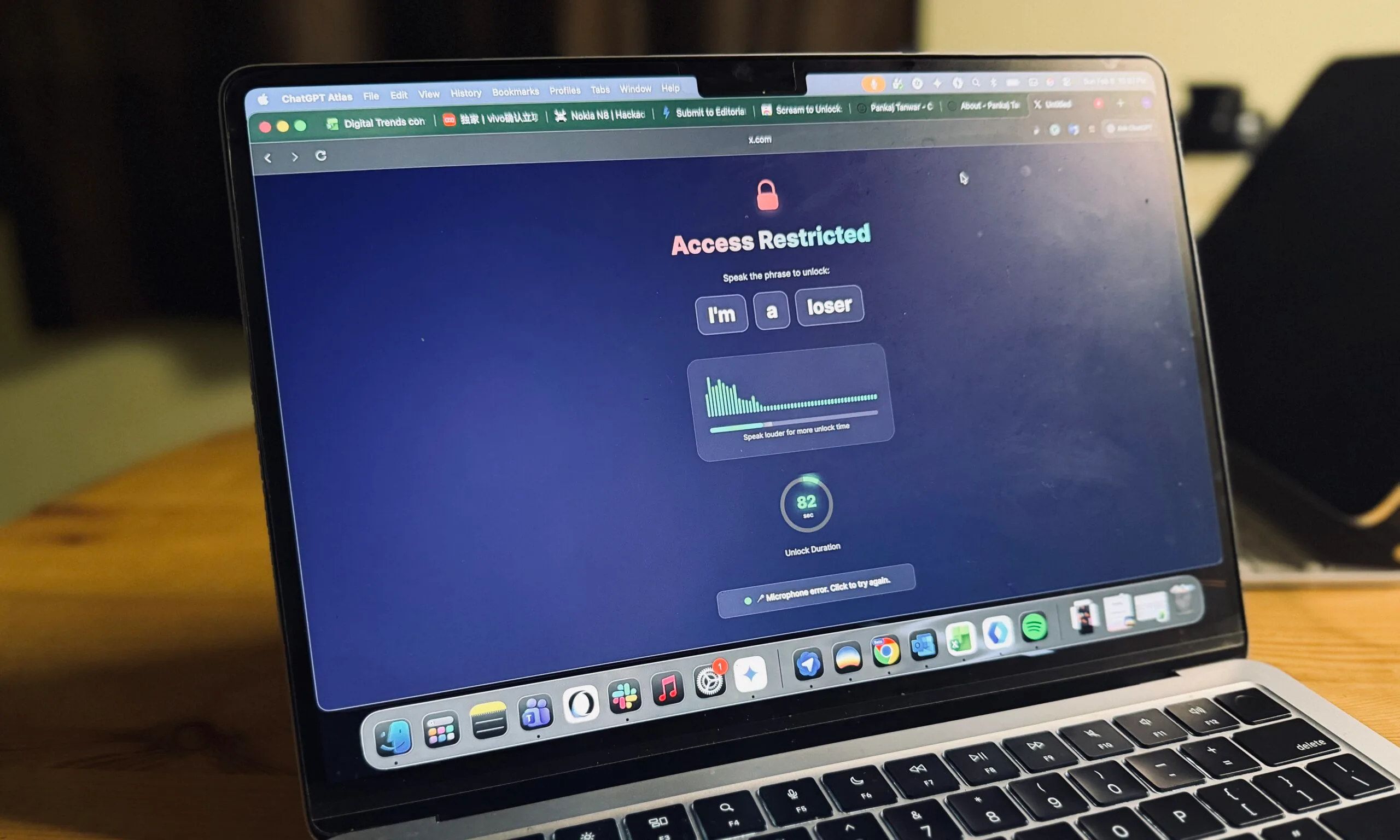

Launched publicly in July 2023, xAI has a stated mission to “understand the true nature of the universe.” At the heart of its technical development is Grok, an AI chatbot designed to be more unfiltered and less constrained by conventional safety protocols than its rivals. Key elements of xAI's philosophy and product offerings include:

- 'Maximally Truthful AI': Musk has repeatedly articulated a vision for AI that prioritizes factual accuracy and directness, even if the answers are controversial or provocative, contrasting it with AI systems he perceives as overly cautious or politically biased.

- Grok's Personality: Grok is designed with a rebellious streak, often giving snarky or unconventional responses, and is built to draw on real-time information from X (formerly Twitter). This keeps its answers current but also exposes it to unfiltered content.

- Criticism of 'Woke' AI: Elon Musk has been a vocal critic of what he calls 'woke AI,' arguing that excessive alignment efforts stifle AI's potential and introduce bias by forcing specific ideological frameworks onto the models.

- Open-Sourcing: In March 2024, xAI open-sourced Grok-1, releasing the weights and architecture of its base model under the Apache 2.0 license. The move encourages transparency and collaborative development but also decentralizes control over how the model is used.

Context & Market Position

xAI's safety philosophy stands in stark contrast to the dominant paradigm in AI development, particularly that of giants like OpenAI, Google DeepMind, and Anthropic. These organizations invest heavily in AI alignment research, focusing on ensuring AI systems are helpful, harmless, and honest. They employ extensive red-teaming, implement strict content policies, and develop mechanisms to prevent the generation of misinformation, hate speech, or dangerous instructions. xAI, by contrast, positions itself as a challenger to this 'over-safety-ing' approach, aiming for an AI that is less censored and more directly reflective of the vast, often messy, information landscape.

“The danger of training AI to be 'woke' is that it will essentially become a filter on information, which is bad for society and potentially very dangerous if taken to an extreme.”

— Elon Musk, via X (formerly Twitter)

This positioning resonates with a segment of users and developers frustrated by what they see as overly restrictive AI models. However, it also raises significant concerns among AI ethicists and safety researchers, who warn that powerful, unaligned, or inadequately safeguarded AI could amplify societal harms, spread misinformation more effectively, or even pose existential risks if scaled to superintelligence without proper control mechanisms. In effect, xAI is testing how much 'truthfulness' the public and regulators will accept when it comes with fewer guardrails.

Why It Matters

The debate around xAI's safety philosophy is not merely academic; it has profound implications for consumers, the industry, and the future trajectory of AI. For consumers, xAI promises an AI that will not shy away from controversial topics or retreat into 'politically correct' answers. This could be empowering for those seeking unfiltered information, but it also carries the risk of encountering biased, inaccurate, or even harmful content without the usual protective layers. Open-sourcing Grok-1 spreads these risks further, because anyone can adapt the model for their own applications, some of them unintended or malicious.

For the AI industry, xAI's approach represents a significant philosophical divergence. It challenges established norms of responsible AI development and could either push competitors to reconsider their own guardrails or solidify the perception that xAI operates with an elevated risk profile. If xAI's 'maximally truthful' models prove highly capable and gain traction, they could force a re-evaluation of how 'safety' is defined and implemented across the board. If, on the other hand, high-profile incidents of misuse or harmful outputs arise, they could bring increased regulatory scrutiny not just for xAI but for the entire sector.

What's Next

The coming months will be critical for xAI as it continues to develop Grok and expand its capabilities. The industry will be closely watching for how xAI balances its commitment to 'truthfulness' with the undeniable need for responsible AI. Regulatory bodies worldwide are already grappling with how to govern AI; xAI's distinctive stance could either provide a blueprint for a new form of AI governance or become a flashpoint for tighter restrictions. The ongoing tension between unbridled innovation and essential safety measures will define not just xAI's future, but potentially the direction of AI development for years to come.